How Operating Systems Manage Memory and Processes

Understanding how operating systems work requires delving into memory and process management. Efficient memory management is the bedrock of system efficiency, dictating how data is stored and accessed. Process management, in turn, covers scheduling and context switching, which make smooth multitasking possible. Operating systems allocate and deallocate resources dynamically as demand changes, keeping resource utilization high. This blog post explores these core concepts and offers practical tips and best practices for optimizing system performance and keeping your computer responsive. Along the way, you will see how efficient resource handling contributes to overall system stability and speed.

Understanding Memory Management: The Foundation Of System Efficiency

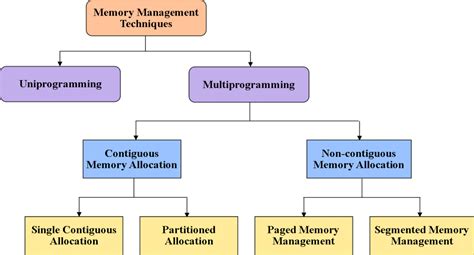

Memory management is a crucial function of any operating system. It directly impacts the performance and stability of a computer system. Efficient memory management ensures that multiple processes can run concurrently without interfering with each other, and that the system remains responsive even under heavy load. The operating system is responsible for allocating and deallocating memory for running programs, keeping track of which memory is free, reclaiming a process’s memory when it exits, and preventing processes from corrupting each other’s memory. Understanding how operating systems handle memory is fundamental to understanding overall system efficiency.

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Contiguous Allocation | Each process is allocated a single, contiguous block of memory. | Simple to implement. | Can lead to external fragmentation. |
| Paging | Physical memory is divided into fixed-size frames, and each process’s address space into pages of the same size. | Eliminates external fragmentation. | Can lead to internal fragmentation (unused space in a process’s last page). |
| Segmentation | Memory is divided into logical segments of varying sizes (e.g., code, data, stack). | Supports logical organization of memory. | Can lead to external fragmentation. |
| Virtual Memory | Lets processes use more memory than is physically available by moving data between RAM and disk. | Allows for larger processes and better memory utilization. | Slower whenever data must be fetched from disk. |
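
To make paging concrete, here is a minimal Python sketch of address translation. It assumes a 4 KiB page size and a hypothetical single-level page table stored in a dictionary; real systems perform this lookup in hardware (the MMU), usually with multi-level page tables and a TLB cache. The sketch only shows how a virtual address splits into a page number and an offset.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages (common, but not universal)

# Hypothetical page table for one process: virtual page number -> physical frame number
page_table = {0: 5, 1: 2, 2: 7}


def translate(virtual_address: int) -> int:
    """Translate a virtual address into a physical address."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # Real hardware would raise a page fault here for the OS to handle
        raise LookupError(f"page fault: page {page_number} is not mapped")
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset


print(translate(4100))  # page 1, offset 4 -> frame 2 -> 8196
```

Because memory is handed out in whole pages, a process that needs 4,097 bytes receives two pages (8,192 bytes); the unused remainder of the second page is the internal fragmentation mentioned in the table.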

Without effective memory management, systems would be plagued by crashes, slow performance, and the inability to run multiple applications simultaneously. Modern operating systems employ sophisticated techniques to optimize memory usage. These techniques include virtual memory, paging, and segmentation, all designed to make the most of the available memory and keep processes from interfering with each other. The goal is an environment where applications run smoothly and efficiently within the limits of the underlying hardware.

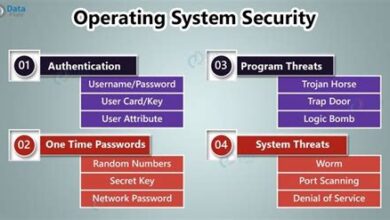

The operating system also plays a vital role in protecting memory. It prevents processes from accessing memory that does not belong to them, which keeps the system stable and secure. This protection is typically enforced with hardware support from the memory management unit (MMU), for example through per-page permission bits that mark memory as readable, writable, or executable. When a process attempts to access an unauthorized memory region, the hardware raises a fault and the operating system intervenes, typically terminating the process (on Unix-like systems, by delivering a SIGSEGV signal) before it can damage the rest of the system. This is a critical aspect of maintaining a secure and reliable computing environment.
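
The following Python sketch models permission-checked memory access. The page table, the `Perm` flags, and the `ProtectionFault` exception are hypothetical stand-ins: on real hardware the MMU checks permission bits on every access and raises a fault, which the OS then handles.

```python
from enum import Flag, auto

PAGE_SIZE = 4096  # assumption: 4 KiB pages


class Perm(Flag):
    READ = auto()
    WRITE = auto()
    EXEC = auto()


class ProtectionFault(Exception):
    """Stands in for the hardware fault the OS would handle (e.g., with SIGSEGV)."""


# Hypothetical page table: page number -> (physical frame, allowed accesses)
page_table = {
    0: (3, Perm.READ | Perm.EXEC),   # code: readable and executable, not writable
    1: (8, Perm.READ | Perm.WRITE),  # data: readable and writable
}


def access(virtual_address: int, wanted: Perm) -> int:
    """Return the physical address if the access is permitted, otherwise fault."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in page_table:
        raise ProtectionFault(f"page {page} does not belong to this process")
    frame, allowed = page_table[page]
    if wanted not in allowed:
        raise ProtectionFault(f"{wanted} access denied on page {page}")
    return frame * PAGE_SIZE + offset
```

Here `access(4106, Perm.WRITE)` succeeds because page 1 is writable, while `access(100, Perm.WRITE)` raises `ProtectionFault` because page 0 holds read-only code.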

Key Aspects of Memory Management:

- Allocation: Assigning memory blocks to processes.

- Deallocation: Releasing memory blocks when they are no longer needed.

- Protection: Preventing processes from accessing unauthorized memory regions.

- Virtual Memory: Using disk space as an extension of RAM.

- Fragmentation Management: Reducing wasted memory space.

- Swapping: Moving processes between RAM and disk to optimize memory usage.
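
Virtual memory and swapping raise a practical question: when RAM is full and a process touches a page that is on disk, which resident page should be moved out? The Python sketch below counts page faults under least-recently-used (LRU) replacement. LRU is chosen here as one common textbook policy; real kernels typically use cheaper approximations of it.

```python
from collections import OrderedDict


def count_page_faults(references: list[int], num_frames: int) -> int:
    """Count page faults for a reference string under LRU replacement.

    Assumes num_frames >= 1 and that memory starts empty.
    """
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)  # hit: now the most recently used page
            continue
        faults += 1  # miss: the page must be loaded from disk
        if len(frames) == num_frames:
            frames.popitem(last=False)  # evict the least recently used page
        frames[page] = None
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(count_page_faults(refs, 3))  # 12
print(count_page_faults(refs, 4))  # 8
```

With this reference string, three frames produce 12 page faults and four frames produce 8. The same effect at a larger scale is why adding RAM helps when a system is constantly swapping.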

Memory management also involves dynamic memory allocation, where memory is allocated to processes at runtime as needed. This contrasts with static allocation, where the amount of memory is fixed when the program is compiled. Dynamic allocation provides greater flexibility and efficiency, allowing processes to request memory as they need it and release it when it’s no longer required. This dynamic approach is essential for handling varying workloads and unpredictable memory demands.
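
Dynamic allocation and external fragmentation can both be seen in a toy first-fit allocator. `FirstFitAllocator` is a hypothetical class written for illustration; real allocators such as `malloc` use far more elaborate strategies. It hands out contiguous blocks from a fixed region, returns freed blocks to a list of holes, and merges adjacent holes.

```python
class FirstFitAllocator:
    """Toy contiguous allocator that hands out blocks from one fixed region."""

    def __init__(self, size: int):
        self.holes = [(0, size)]  # free (start, length) pairs, sorted by start
        self.used = {}            # start -> length of each allocated block

    def allocate(self, length: int):
        """Return the start of the first hole big enough, or None if none fits."""
        for i, (start, hole_len) in enumerate(self.holes):
            if hole_len >= length:
                if hole_len == length:
                    del self.holes[i]  # hole consumed exactly
                else:
                    self.holes[i] = (start + length, hole_len - length)  # shrink hole
                self.used[start] = length
                return start
        return None

    def release(self, start: int) -> None:
        """Free a block and coalesce it with neighboring holes."""
        length = self.used.pop(start)
        merged = []
        for s, n in sorted(self.holes + [(start, length)]):
            if merged and merged[-1][0] + merged[-1][1] == s:
                merged[-1] = (merged[-1][0], merged[-1][1] + n)  # adjacent: merge
            else:
                merged.append((s, n))
        self.holes = merged


heap = FirstFitAllocator(100)
a, b, c = heap.allocate(30), heap.allocate(30), heap.allocate(30)
heap.release(a)
heap.release(c)
print(heap.holes)         # [(0, 30), (60, 40)]: 70 bytes free in total
print(heap.allocate(50))  # None: no single hole is 50 bytes long
```

After the first and third blocks are freed, 70 bytes are free in total, yet a 50-byte request fails because no single hole is large enough. That is external fragmentation.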

Process Management: Scheduling And Context Switching Explained

In the realm of operating systems, process management is a critical function that ensures applications run smoothly and efficiently. It involves managing the lifecycle of processes, from creation to termination, and allocating system resources effectively. A key aspect of process management is scheduling, which determines the order in which processes are executed. Understanding how operating systems handle scheduling and context switching is essential for grasping the core principles of system performance, because the way the operating system manages processes directly affects the responsiveness and stability of the entire system.

Effective scheduling algorithms are designed to optimize various system metrics, such as CPU utilization, throughput, and response time. Different algorithms cater to different system requirements; some prioritize fairness, ensuring that all processes get a fair share of CPU time, while others focus on minimizing response time for interactive applications. Understanding these trade-offs is crucial for selecting the appropriate scheduling strategy for a given environment. Let’s delve deeper into some common scheduling algorithms and their characteristics.

Scheduling Algorithms

Scheduling algorithms are at the heart of process management, dictating how the OS allocates CPU time to different processes. These algorithms vary in complexity and efficiency, each suited to different types of workloads. Understanding these algorithms is crucial for optimizing system performance and ensuring fairness among processes. Here are a few notable scheduling algorithms:

- First-Come, First-Served (FCFS): Processes are executed in the order they arrive. Simple to implement but can lead to long waiting times for short processes if a long process arrives first.

- Shortest Job First (SJF): Processes with the shortest execution time are executed first. Minimizes average waiting time but requires knowing the execution time in advance.

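- Priority Scheduling: Processes are assigned priorities, and the highest priority process is executed first. Can lead to starvation if higher-priority processes keep arriving and low-priority processes never get the CPU; aging, which gradually raises the priority of processes that have waited a long time, is a common remedy.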

- Round Robin: Each process is given a fixed time slice (quantum) of CPU time. After the time slice expires, the process is moved to the back of the ready queue. Provides fairness and responsiveness.

- Multilevel Queue Scheduling: Multiple queues with different scheduling algorithms are used. Processes are assigned to a queue based on their characteristics (e.g., interactive vs. batch).
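
The waiting-time trade-off between FCFS and SJF is easy to check with a short calculation. This Python sketch assumes that all jobs arrive at time 0 and run to completion without preemption; the burst times are made-up values.

```python
def average_waiting_time(burst_times: list[int]) -> float:
    """Average waiting time when jobs run back to back in the given order.

    Assumes every job arrives at time 0 and runs without preemption.
    """
    waiting = clock = 0
    for burst in burst_times:
        waiting += clock  # this job waited for every job scheduled before it
        clock += burst
    return waiting / len(burst_times)


bursts = [24, 3, 3]  # hypothetical CPU bursts in ms, in arrival order
fcfs = average_waiting_time(bursts)         # FCFS: arrival order
sjf = average_waiting_time(sorted(bursts))  # SJF: shortest burst first
print(fcfs, sjf)  # 17.0 3.0
```

Running the 24 ms job first makes the two short jobs wait behind it (an average of 17 ms), while SJF cuts the average to 3 ms.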

The selection of a scheduling algorithm depends heavily on the specific requirements of the system. For instance, real-time systems often require algorithms that can guarantee deadlines, whereas interactive systems benefit from algorithms that minimize response time. How the operating system is configured, including which scheduling policy it uses, therefore matters a great deal.

Context switching is another essential aspect of process management, allowing the OS to switch between different processes efficiently. This involves saving the state of the current process and restoring the state of the next process to be executed. The efficiency of context switching directly impacts the overall performance of the system. The following table summarizes key aspects of context switching:

| Aspect | Description | Impact |
|---|---|---|
| State Saving | Saving the current process’s registers, program counter, and other relevant information. | Ensures the process can be resumed correctly later. |
| State Restoring | Loading the saved state of the next process to be executed. | Allows the next process to start execution from where it left off. |
| Overhead | The time taken to perform state saving and restoring. | Impacts system performance; minimizing overhead is crucial. |
| Frequency | The number of context switches per unit of time. | High frequency can lead to increased overhead. |
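
The link between scheduling and context-switch frequency can be illustrated with a Round Robin simulation. This Python sketch is a simplification that assumes all processes arrive at time 0 and treats each switch as free; in a real system every switch costs time, which is the overhead described in the table. The function name `round_robin` and the burst times are illustrative.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> tuple[dict[str, int], int]:
    """Simulate Round Robin; return (completion time per process, context switches).

    Assumes all processes arrive at time 0 and a switch takes no time.
    """
    ready = deque(bursts.items())  # ready queue of (name, remaining burst)
    clock, switches, previous = 0, 0, None
    finished = {}
    while ready:
        name, remaining = ready.popleft()
        if previous is not None and previous != name:
            switches += 1  # the CPU moves to a different process
        run = min(quantum, remaining)
        clock += run
        if remaining > run:
            ready.append((name, remaining - run))  # quantum expired: back of the queue
        else:
            finished[name] = clock
        previous = name
    return finished, switches


procs = {"A": 5, "B": 3, "C": 1}  # hypothetical burst times in ms
for q in (2, 4):
    done, switches = round_robin(procs, q)
    print(f"quantum={q}: finished at {done}, {switches} context switches")
```

With these bursts, a quantum of 2 causes 5 context switches and a quantum of 4 only 3. A smaller quantum improves responsiveness but raises switching overhead, so the quantum is a tuning trade-off.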

Context Switching Mechanics

Context switching is a fundamental operation that lets the operating system share the CPU among multiple processes. It involves saving the current state of a process so it can be restored later, allowing the process to continue execution as if it had never been interrupted. Because a busy system switches contexts constantly, the speed of each switch is critical for responsiveness and overall performance, and minimizing this overhead is a key goal in operating system design.
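
What "saving" and "restoring" state means can be sketched with two small records. `CPU`, `PCB`, and `context_switch` are hypothetical names for this Python illustration; a real kernel saves far more state, does it in architecture-specific code, and, when switching between processes, also switches address spaces.

```python
from dataclasses import dataclass, field


@dataclass
class CPU:
    """The (greatly simplified) state a context switch must preserve."""
    program_counter: int = 0
    registers: dict = field(default_factory=dict)


@dataclass
class PCB:
    """Hypothetical process control block holding a saved CPU context."""
    pid: int
    program_counter: int = 0
    registers: dict = field(default_factory=dict)


def context_switch(cpu: CPU, current: PCB, nxt: PCB) -> None:
    # Save: copy the running process's context into its PCB
    current.program_counter = cpu.program_counter
    current.registers = dict(cpu.registers)
    # Restore: load the next process's saved context onto the CPU
    cpu.program_counter = nxt.program_counter
    cpu.registers = dict(nxt.registers)


cpu = CPU(program_counter=100, registers={"r0": 7})
p1 = PCB(pid=1)
p2 = PCB(pid=2, program_counter=500, registers={"r0": 42})
context_switch(cpu, p1, p2)  # p1 is paused; p2 resumes at 500
context_switch(cpu, p2, p1)  # p1 resumes exactly where it stopped
print(cpu.program_counter, cpu.registers)  # 100 {'r0': 7}
```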

Processes exist in various states throughout their lifecycle, reflecting their current activity and resource requirements. These states are crucial for understanding how the operating system manages and schedules processes effectively.

Process States

Understanding the different states a process can be in is essential for comprehending process management. Each state reflects a different stage in the process’s lifecycle, from creation to completion. The operating system uses these states to manage and schedule processes efficiently.

These process states include:

- New: The process is being created.

- Ready: The process is waiting to be assigned to a processor.

- Running: Instructions are being executed.

- Waiting (Blocked): The process is waiting for some event to occur (e.g., I/O completion or receiving a signal).

- Terminated: The process has finished execution.
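
The legal moves between these states can be written as a small state machine. This Python sketch encodes the classic five-state model; the `Process` class and the transition table are illustrative, and real kernels often track additional states.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Allowed transitions in the classic five-state model
TRANSITIONS = {
    State.NEW: {State.READY},                  # admitted by the OS
    State.READY: {State.RUNNING},              # dispatched by the scheduler
    State.RUNNING: {State.READY,               # preempted (e.g., time slice expired)
                    State.WAITING,             # blocked on I/O or another event
                    State.TERMINATED},         # finished or killed
    State.WAITING: {State.READY},              # the awaited event occurred
    State.TERMINATED: set(),
}


class Process:
    def __init__(self, pid: int):
        self.pid = pid
        self.state = State.NEW

    def move_to(self, new_state: State) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new_state.name}")
        self.state = new_state


p = Process(pid=1)
for step in (State.READY, State.RUNNING, State.WAITING,
             State.READY, State.RUNNING, State.TERMINATED):
    p.move_to(step)
print(p.state)  # State.TERMINATED
```

Calling `Process(pid=2).move_to(State.RUNNING)` raises `ValueError`, because a new process must first be admitted to the ready queue.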

The operating system manages the transitions between these states. The scheduler moves processes from Ready to Running based on factors such as process priority, resource availability, and the scheduling algorithm in use, while events such as timer interrupts, I/O requests, and I/O completion move processes between the other states. Managing process states efficiently keeps system resources well utilized, gets processes executed in a timely manner, and is vital for a responsive and stable computing environment.

In summary, process management, encompassing scheduling algorithms and context switching, is pivotal for the operational efficiency of any operating system. These mechanisms ensure that resources are allocated judiciously and that the system remains responsive, even under heavy load. How well the operating system manages these tasks determines its overall effectiveness.

How Operating Systems Allocate And Deallocate Resources Dynamically

Operating systems are responsible for efficiently managing system resources, including memory, CPU time, and I/O devices. Dynamic resource allocation and deallocation are critical functions that enable the OS to adapt to changing application demands. How operating systems handle these processes directly impacts overall system performance and stability. Understanding these mechanisms is essential for anyone involved in software development or system administration.

Dynamic allocation allows the operating system to grant resources to processes as needed during runtime. This contrasts with static allocation, where resources are assigned at compile time or system startup. Dynamic allocation provides greater flexibility and efficiency, allowing the system to better utilize available resources and accommodate varying workloads.

| Resource Type | Allocation Method | Deallocation Trigger |
|---|---|---|
| Memory | Heap allocation (e.g., malloc, new) | Explicit deallocation (e.g., free, delete) or garbage collection |
| CPU Time | Scheduling algorithms (e.g., Round Robin, Priority Scheduling) | Process completion, time slice expiration, or preemption |
| File Descriptors | Open system call | Close system call or process termination |
| Network Sockets | Socket creation system calls | Socket closure or process termination |
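
The file-descriptor and socket rows of the table map directly onto system calls. The Python sketch below uses `os.open`/`os.close` and the `socket` module, which are thin wrappers around the corresponding POSIX calls; the scratch-file name is an arbitrary choice.

```python
import os
import socket
import tempfile

# File descriptor: allocated by open(2), released by close(2)
path = os.path.join(tempfile.gettempdir(), "fd_demo.txt")  # arbitrary scratch file
fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
print("got file descriptor", fd)
os.write(fd, b"hello\n")
os.close(fd)  # the number is now free for a later open() to reuse
os.remove(path)

# Network socket: allocated by socket(2), released by close(2)
sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
print("socket descriptor", sock.fileno())  # on POSIX, sockets are file descriptors too
sock.close()
print(sock.fileno())  # -1: the descriptor has been released
```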

Steps for Dynamic Resource Allocation:

- Request Initiation: A process requests a specific resource from the operating system.

- Availability Check: The OS checks if the requested resource is available.

- Allocation: If available, the OS allocates the resource to the process.

- Tracking: The OS updates its internal tables to reflect the allocation.

- Resource Use: The process uses the allocated resource.

- Deallocation Request: Once the process is finished, it requests the resource to be deallocated.

- Deallocation: The OS deallocates the resource, making it available for other processes.
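
The steps above can be sketched as a small bookkeeping class. `ResourceManager` is hypothetical and tracks a pool of identical units (think of them as buffers or device slots); a real OS keeps per-resource tables and would usually block a process whose request cannot be met, rather than simply returning `False`.

```python
class ResourceManager:
    """Hypothetical OS-side bookkeeping for a pool of identical resource units."""

    def __init__(self, total: int):
        self.available = total
        self.allocations = {}  # pid -> units held: the OS's internal table

    def request(self, pid: int, units: int) -> bool:
        # Availability check: refuse if the pool cannot satisfy the request
        if units > self.available:
            return False
        # Allocation, then tracking in the internal table
        self.available -= units
        self.allocations[pid] = self.allocations.get(pid, 0) + units
        return True

    def release(self, pid: int, units: int) -> None:
        # Deallocation: return units to the pool for other processes
        held = self.allocations.get(pid, 0)
        if units > held:
            raise ValueError(f"pid {pid} holds only {held} units")
        if units == held:
            self.allocations.pop(pid, None)
        else:
            self.allocations[pid] = held - units
        self.available += units

    def release_all(self, pid: int) -> None:
        # On process termination, reclaim whatever the process still holds
        self.available += self.allocations.pop(pid, 0)


rm = ResourceManager(total=4)
print(rm.request(pid=1, units=3), rm.request(pid=2, units=2))  # True False
rm.release(pid=1, units=3)  # process 1 is finished with its units
print(rm.request(pid=2, units=2), rm.available)  # True 2
```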

Deallocation is just as important as allocation. When a process no longer needs a resource, it must be returned to the system. Failure to deallocate resources leads to resource leaks, which degrade performance and can eventually cause system instability. Within a program, memory is released through the language runtime, for example by calling free() in C or through garbage collection in Java; the OS itself reclaims any memory, file descriptors, and sockets a process still holds when that process terminates. Efficient dynamic resource management is a cornerstone of a well-functioning operating system, impacting everything from application responsiveness to overall system throughput.


Optimizing System Performance: Practical Tips And Best Practices

Improving system performance involves a combination of strategies targeting both memory and process management. Understanding how operating systems handle these aspects is crucial, but applying practical optimizations can significantly enhance overall efficiency. This section focuses on actionable steps and best practices to keep your system running smoothly.

| Bottleneck | Description | Potential Solutions |
|---|---|---|
| Memory Leaks | Unreleased memory accumulates, reducing available RAM. | Find and fix leaks with memory profiling tools; restarting the affected application frees the memory in the meantime. |
| CPU Overload | Too many processes competing for CPU time. | Identify and terminate resource-intensive processes, optimize code. |
| Disk Thrashing | Excessive swapping between RAM and disk. | Increase RAM, run fewer memory-hungry programs at once, optimize virtual memory settings. |
| Fragmented Memory | Free memory is split into small, non-contiguous blocks. | Defragment memory (OS dependent), allocate memory in larger chunks. |

One key area is minimizing unnecessary memory usage. Applications often consume more memory than required, leading to slowdowns, especially on systems with limited RAM. Regularly monitoring memory consumption and closing unused programs can free up valuable resources. Furthermore, optimizing startup processes can prevent unnecessary background processes from hogging memory and CPU cycles.

Process management is equally important. A large number of active processes can strain system resources. Prioritizing essential processes and limiting the number of concurrently running applications can improve responsiveness. Using Task Manager on Windows, Activity Monitor on macOS, top on Linux, or similar tools to identify and terminate unresponsive or resource-intensive processes can also provide immediate relief. Scheduling priorities matter too: giving critical tasks a higher priority, or background work a lower one, ensures that critical tasks receive the CPU time they need and can improve overall system stability.
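
On Unix-like systems, a process can ask the scheduler to treat it as lower priority by raising its niceness value (the same mechanism the `nice` command uses). The Python sketch below calls `os.nice`, which is available only on Unix; `lower_priority` is a hypothetical helper name. Unprivileged users can raise niceness but not lower it again.

```python
import os


def lower_priority(increment: int = 10) -> int:
    """Raise this process's niceness so the scheduler favors other work.

    Unix only. Returns the new niceness value.
    """
    return os.nice(increment)


if __name__ == "__main__":
    print("niceness before:", os.nice(0))  # an increment of 0 just reports the value
    print("niceness after:", lower_priority(5))
    # ... a long-running background job would go here
```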

Effective disk management also plays a crucial role. Regularly defragmenting traditional hard drives (HDDs) can improve data access times. Additionally, keeping sufficient free disk space leaves room for the swap space or page file that virtual memory relies on. Solid-state drives (SSDs) generally don’t require defragmentation, but maintaining adequate free space is still important for optimal performance.

Actionable Steps for System Optimization:

- Regularly monitor memory and CPU usage.

- Close unused applications and background processes.

- Optimize startup programs to reduce boot time and resource consumption.

- Defragment hard drives (HDDs) regularly.

- Ensure sufficient free disk space.

- Update drivers and operating system regularly.

- Consider upgrading hardware (RAM, SSD) for significant performance gains.
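
Some of these checks are easy to script. As one example, this Python sketch uses `shutil.disk_usage` to check how much of a disk is free. The 15% threshold is an arbitrary assumption, not a recommendation from any OS vendor.

```python
import shutil

MIN_FREE_RATIO = 0.15  # assumption: warn when less than 15% of the disk is free


def free_space_ratio(path: str = "/") -> float:
    """Fraction of the filesystem containing `path` that is free.

    On Windows, pass a drive root such as "C:\\" instead of "/".
    """
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (in bytes)
    return usage.free / usage.total


ratio = free_space_ratio()
if ratio < MIN_FREE_RATIO:
    print(f"Warning: only {ratio:.0%} of the disk is free")
else:
    print(f"{ratio:.0%} of the disk is free")
```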

Finally, keeping your operating system and drivers up to date is essential. Updates often include performance improvements and bug fixes that can enhance overall system stability and speed. By implementing these practical tips and best practices, you can optimize your system’s performance and ensure a smoother, more efficient computing experience. Remember that regular maintenance and proactive monitoring are key to preventing performance degradation over time and to keeping the operating system’s resource management effective.